𝐃𝐞𝐜𝐞𝐧𝐭𝐫𝐚𝐥𝐢𝐳𝐞𝐝 𝐀𝐈: 𝐒𝐢𝐱 𝐔𝐧𝐝𝐞𝐫 𝐓𝐡𝐞 𝐑𝐚𝐝𝐚𝐫 𝐎𝐩𝐞𝐫𝐚𝐭𝐨𝐫𝐬 𝐑𝐞𝐰𝐢𝐫𝐢𝐧𝐠 𝐂𝐨𝐦𝐩𝐮𝐭𝐞, 𝐏𝐫𝐢𝐯𝐚𝐜𝐲, 𝐀𝐧𝐝 𝐎𝐰𝐧𝐞𝐫𝐬𝐡𝐢𝐩

Web3 has no shortage of noise, yet the work that matters is happening where teams close stubborn infrastructure gaps:

• Verifiable compute,

• Data sovereignty, and

• Aligned incentives.

Below is a field guide to six such builders whose traction already hints at the next step-change for AI.👇

---

Numerai (@numerai): Crowd Intelligence, Collateralized

San Francisco’s Numerai turns a global data-science tournament into a live hedge fund. Contributors build models on obfuscated data, submit predictions, and stake $NMR on them. The protocol aggregates the predictions into a single meta-model that automatically sizes positions in US equities. Payouts track how predictions perform against live market returns, while poor models forfeit part of their stake, creating what founder @richardcraib calls “skin-in-the-math.” Numerai has raised approximately $32.8M, has over $150M in staked NMR, and distributes six-figure sums in weekly rewards to thousands of pseudonymous quants.

---

Gensyn (@gensynai): Proof-of-Learning At Cloud Scale

GPU markets are distorted, but Gensyn sidesteps the roadblocks by recruiting any idle hardware and verifying the work with optimistic checks and a probabilistic “proof-of-learning.” Developers submit a training job, peers do the heavy lifting, and correctness is settled on-chain before payment clears. The London crew banked roughly $43M in a round led by a16z crypto and is targeting LLM fine-tuning, where compute is both scarce and expensive.
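
Optimistic settlement of training work can be illustrated with a toy dispute. This sketch assumes, for illustration only, a deterministic one-parameter training step and a solver that commits to a hash chain of checkpoints. A verifier who disagrees binary-searches the chain to isolate the first divergent step. The function names are hypothetical, and this is not Gensyn's protocol.

```python
# Toy optimistic-verification dispute; hypothetical names, not Gensyn's protocol.
import hashlib
import struct
from typing import Optional


def train_step(w: float) -> float:
    """Deterministic toy SGD step on the loss (w - 3)^2 with learning rate 0.1."""
    grad = 2.0 * (w - 3.0)
    return w - 0.1 * grad


def run(w0: float, steps: int, faulty_step: Optional[int] = None) -> list[bytes]:
    """Train and return a hash chain of checkpoints, one entry per step.

    Each entry hashes the previous entry plus the new weight, so once two runs
    diverge, every later entry differs as well.
    """
    chain, h, w = [], b"", w0
    for t in range(steps):
        w = train_step(w)
        if t == faulty_step:
            w += 1e-3  # a lazy or dishonest solver corrupts this step
        h = hashlib.sha256(h + struct.pack("<d", w)).digest()
        chain.append(h)
    return chain


def first_divergence(claimed: list[bytes], recomputed: list[bytes]) -> Optional[int]:
    """Binary-search for the first disputed step in O(log n) comparisons."""
    if claimed[-1] == recomputed[-1]:
        return None  # final checkpoints agree: accept the work optimistically
    lo, hi = 0, len(claimed) - 1  # invariant: first mismatch lies in [lo, hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if claimed[mid] != recomputed[mid]:
            hi = mid
        else:
            lo = mid + 1
    return lo


honest = run(0.0, 32)
claimed = run(0.0, 32, faulty_step=13)
print(first_divergence(claimed, honest))
```

In a scheme like this, an arbiter then only needs to re-execute the single isolated step. That keeps checking cheap compared with replaying a whole run. The sketch replays everything on the verifier side purely for brevity.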

---

MyShell (@myshell_ai): User-Owned Agents As Digital Goods

MyShell gives creators a no-code studio to build voice assistants, game NPCs, or productivity bots, then package them as NFTs and earn $SHELL token royalties when others deploy or remix them. The project has attracted more than 1M users and $16.6M in funding by positioning itself as a consumer-facing layer for personalised AI that is portable across apps. No API key, no gatekeeper.
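
Remix royalties can be pictured as a fee that flows up an agent's lineage. The sketch below is hypothetical. The 10% upstream share, the `Agent` type, and the `split_fee` rule are invented for illustration and are not MyShell's actual royalty terms.

```python
# Hypothetical royalty split along a remix lineage; not MyShell's actual terms.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    name: str
    creator: str
    parent: Optional["Agent"] = None  # the agent this one was remixed from, if any


def split_fee(agent: Agent, fee: float, upstream_share: float = 0.10) -> dict[str, float]:
    """Each creator keeps (1 - upstream_share) of what reaches them and passes the rest up.

    The original creator at the root of the lineage keeps whatever reaches them.
    """
    payouts: dict[str, float] = {}
    remaining = fee
    node: Optional[Agent] = agent
    while node is not None:
        passed_up = remaining * upstream_share if node.parent is not None else 0.0
        payouts[node.creator] = payouts.get(node.creator, 0.0) + remaining - passed_up
        remaining = passed_up
        node = node.parent
    return payouts


base = Agent("voice-tutor", "ana")
remix = Agent("spanish-tutor", "ben", parent=base)
print(split_fee(remix, 100.0))
```

Passing a fixed fraction up at each hop keeps the total payout equal to the fee. Original creators still earn from deep remix chains, just in shrinking amounts.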

---

FLock (@flock_io): Federated Learning For Privacy-Preserving Models

In sectors where data can never leave the device, such as hospitals or smart-factory sensors, FLock orchestrates small-language-model training across thousands of nodes. Each update is verified with ZK proofs before it is merged into the global model, and contributors earn $FLOCK tokens in proportion to proven utility. A fresh $3M round led by DCG brings total funding to $11M and supports pilots in medical imaging and industrial IoT.
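
A single round of verified federated learning can be sketched in a few lines. This is a simplified illustration, not FLock's protocol, and it rests on several explicit assumptions:

- a one-parameter linear model stands in for a small language model;
- a check that an update lowers loss on a validation set stands in for the ZK proof;
- "proven utility" is taken to be that loss reduction;
- the merge is utility-weighted, a swapped-in choice (classic FedAvg weights by sample count).

```python
# Simplified federated round with update screening; hypothetical, not FLock's protocol.
Point = tuple[float, float]  # (x, y) training sample


def loss(w: float, data: list[Point]) -> float:
    """Mean squared error of the 1-D model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def local_update(w: float, private_data: list[Point], lr: float = 0.05, epochs: int = 20) -> float:
    """Gradient descent on data that never leaves the node; only the new weight is shared."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in private_data) / len(private_data)
        w -= lr * grad
    return w


def fl_round(global_w: float, proposals: dict[str, float], val_data: list[Point],
             reward_pool: float) -> tuple[float, dict[str, float]]:
    """Accept only updates that lower validation loss, then merge and pay by utility."""
    base = loss(global_w, val_data)
    utility: dict[str, float] = {}
    for node, w in proposals.items():
        gain = base - loss(w, val_data)
        if gain > 0:  # stand-in for the proof check: rejected updates earn nothing
            utility[node] = gain
    if not utility:
        return global_w, {}
    total = sum(utility.values())
    new_w = sum(proposals[n] * u for n, u in utility.items()) / total
    rewards = {n: reward_pool * u / total for n, u in utility.items()}
    return new_w, rewards


global_w = 0.0
proposals = {
    "hospital_a": local_update(global_w, [(1.0, 2.0), (2.0, 4.0)]),
    "hospital_b": local_update(global_w, [(3.0, 6.0), (1.0, 2.0)]),
    "bad_node": 10.0,  # a junk update that should be rejected
}
val_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
new_w, rewards = fl_round(global_w, proposals, val_data, reward_pool=100.0)
print(round(new_w, 3), {n: round(r, 1) for n, r in rewards.items()})
```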

---

Ritual (@ritualnet): A Sovereign L1 For AI Workloads

Ritual is building a layer 1 where models live as smart contracts that are versioned, governable, and upgradeable via token voting. Off-chain executors handle the heavy maths, feed results back on-chain, and collect fees. The design promises fault isolation: if a model malfunctions, governance can roll it back without halting the network. Investors have backed the thesis with a $25M Series A.
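
The "model as a versioned, governable contract" idea can be sketched as a small registry. This is hypothetical plain Python, not Ritual's contract interface. The `ModelRegistry` class, the simple token-weighted majority rule, and the `rollback` method are assumptions. They show why versioning turns a rollback into a pointer change rather than a network halt.

```python
# Hypothetical registry illustrating versioned, vote-governed models; not Ritual's API.
from dataclasses import dataclass, field

Votes = dict[str, tuple[float, bool]]  # voter -> (token weight, approves?)


@dataclass
class ModelRegistry:
    versions: list[str] = field(default_factory=list)  # IDs of published model weights
    active: int = -1                                    # index of the version being served

    def publish(self, weights_id: str) -> int:
        """Add a new version without touching the one currently served."""
        self.versions.append(weights_id)
        return len(self.versions) - 1

    def vote_activate(self, version: int, votes: Votes) -> bool:
        """Switch the served version if a token-weighted majority approves."""
        total = sum(weight for weight, _ in votes.values())
        yes = sum(weight for weight, approve in votes.values() if approve)
        if total > 0 and yes / total > 0.5:
            self.active = version
            return True
        return False

    def rollback(self, votes: Votes) -> bool:
        """Re-point to the previous version; nothing is deleted or halted."""
        if self.active <= 0:
            return False
        return self.vote_activate(self.active - 1, votes)

    def serving(self) -> str:
        if self.active < 0:
            raise LookupError("no version has been activated yet")
        return self.versions[self.active]


reg = ModelRegistry()
v0 = reg.publish("weights-v0")
reg.vote_activate(v0, {"alice": (60.0, True), "bob": (40.0, False)})
v1 = reg.publish("weights-v1")
reg.vote_activate(v1, {"alice": (60.0, True), "bob": (40.0, True)})
reg.rollback({"alice": (60.0, True), "bob": (40.0, False)})  # v1 misbehaves
print(reg.serving())
```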

---

Sahara AI (@SaharaLabsAI): Agents With Shared Memory

Sahara deploys autonomous agents onto a peer-to-peer substrate and stores their evolving knowledge graphs on-chain, so that any reasoning step is auditable. Contributors who upload high-quality facts earn token rewards, improving the graph and the agents that rely on it. The company has secured about $49M, including a Pantera-led Series A, and is running early supply-chain analytics pilots where opaque vendor data previously stalled AI adoption.
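
On-chain auditability of shared memory can be approximated with an append-only, hash-linked log of facts. This is a hypothetical stand-in, not Sahara's data model:

- each contributed (subject, relation, object) triple is chained to the previous entry's hash, so tampering is detectable;
- a boolean `approved` flag stands in for quality review;
- the one-unit reward per approved fact is made up.

```python
# Hypothetical hash-linked fact log; not Sahara's data model or reward scheme.
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class FactLog:
    entries: list[dict] = field(default_factory=list)
    rewards: dict[str, int] = field(default_factory=dict)

    def add(self, contributor: str, triple: tuple[str, str, str], approved: bool) -> str:
        """Append a fact, chaining it to the previous entry's hash."""
        prev = self.entries[-1]["hash"] if self.entries else "genesis"
        body = {"contributor": contributor, "triple": list(triple),
                "approved": approved, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        if approved:  # stand-in for validator review of fact quality
            self.rewards[contributor] = self.rewards.get(contributor, 0) + 1
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the chain; editing any past entry breaks it."""
        prev = "genesis"
        for e in self.entries:
            body = {k: e[k] for k in ("contributor", "triple", "approved", "prev")}
            if e["prev"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True

    def facts_about(self, subject: str) -> list[tuple[str, str, str]]:
        """Approved (relation, object, entry hash) facts an agent may reason over."""
        return [(e["triple"][1], e["triple"][2], e["hash"])
                for e in self.entries if e["approved"] and e["triple"][0] == subject]


log = FactLog()
log.add("ana", ("VendorA", "supplies", "PartX"), approved=True)
log.add("ben", ("VendorA", "ships_from", "PortY"), approved=False)
print(log.rewards, log.verify(), log.facts_about("VendorA"))
```

Returning the entry hash with each fact is what makes a reasoning step auditable. An agent's answer can cite exactly which logged facts it relied on.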

---

Strategic Signals

• Cost Pressure Over Hype: Each project bends unit economics in its favour. @numerai does it by externalizing R&D, @gensynai by arbitraging unused silicon, and @flock_io by eliminating data-migration costs.

• Verifiability As Moat: Zero-knowledge attestation, staking, or on-chain audit logs convert trust into math, discouraging copycats that lack similar research depth.

• Composable Edges: @myshell_ai’s agent NFTs could plug straight into Ritual’s execution layer or consume data from Sahara’s graphs, forming a stack in which provenance travels with the model.

---

Risk log

Unsettled token-incentive standards, throughput limits on proof generation, and incumbent clouds ready to match prices all loom large.

The hedge: Back teams whose roadmaps migrate gradually from off-chain to on-chain primitives and who measure success in solved business problems, not token charts.

---

Takeaway

Decentralized AI will not arrive with one flagship chain. It will seep in through practical wins like cheaper training cycles, crowd-sourced alpha signals, and privacy-preserving deployments. The builders above are already selling those wins. Track their metrics, not their memes, and you will see the curve before it becomes consensus.

Thanks for reading!

AI Centralization vs Decentralization: What’s Worth Playing?

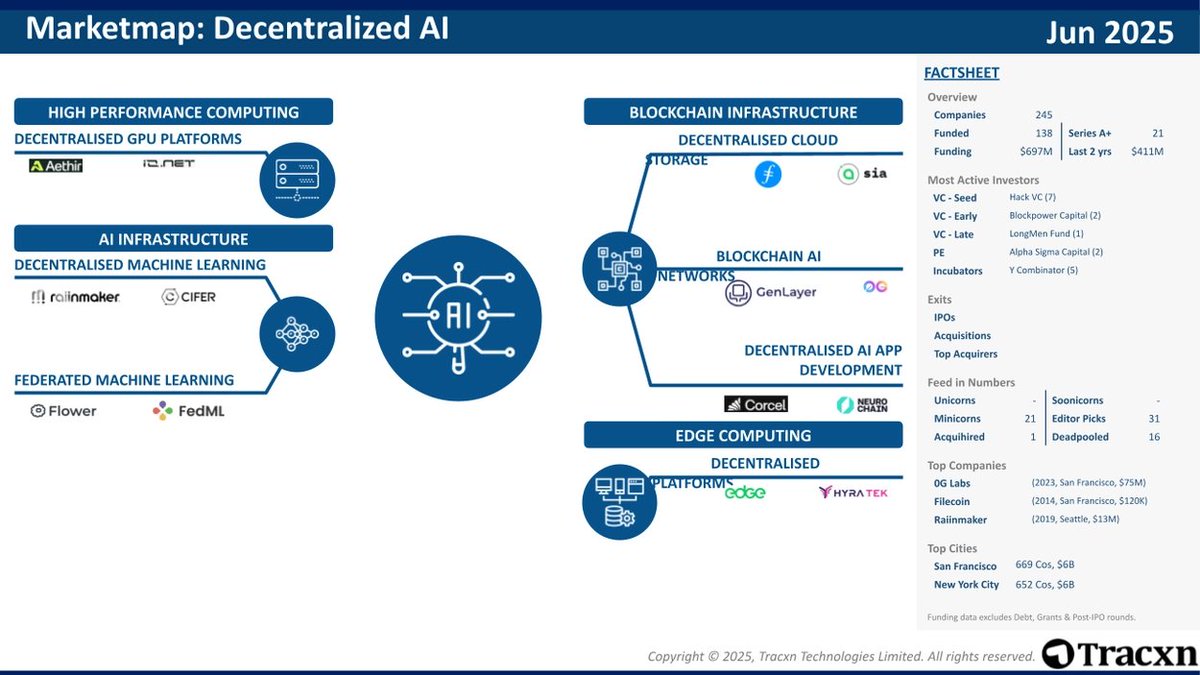

Imagine two arenas: one is dominated by tech giants running massive data centers, training frontier models, and setting the rules. The other distributes compute, data, and decision-making across millions of miners, edge devices, and open communities. Where you choose to build or invest depends on which arena you believe will capture the next wave of value, or whether the true opportunity lies in bridging both.

---

What Centralization and Decentralization Mean in AI

Centralized AI is concentrated in hyperscale cloud platforms like AWS, Azure, and Google Cloud, which control the majority of GPU clusters and hold a combined 68% share of the global cloud market. These platforms and the labs they host train large models, keep weights closed or under restrictive licenses (as OpenAI and Anthropic do), and rely on proprietary datasets and exclusive data partnerships. Governance is typically corporate, steered by boards, shareholders, and national regulators.

On the other hand, decentralized AI distributes computation through peer-to-peer GPU markets such as @akashnet_ and @rendernetwork, as well as on-chain inference networks like @bittensor_. These networks aim to decentralize both training and inference.

---

Why Centralization Still Dominates

There are structural reasons why centralized AI continues to lead.

Training a frontier model, say, a 2-trillion-parameter multilingual model, can require over $500M in hardware, electricity, and human capital. Very few entities can fund and execute such undertakings. Additionally, regulatory obligations such as the EU AI Act (and, until its rescission in January 2025, the 2023 US Executive Order on AI) impose strict requirements around red-teaming, safety reports, and transparency. Meeting these demands creates a compliance moat that favors well-resourced incumbents. Centralization also allows for tighter safety monitoring and lifecycle management across training and deployment phases.
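
As a rough sanity check on that figure, we can use the common estimate that dense-transformer training takes about six FLOPs per parameter per token: C ≈ 6ND, with N parameters and D training tokens. Every input beyond the 2-trillion parameter count is an assumption, not a figure from this article:

- D = 4×10^13 tokens (roughly 20 tokens per parameter);
- a per-GPU peak P of 10^15 FLOP/s at utilization η = 0.4;
- a rental price of $2 per GPU-hour.

$$
\begin{aligned}
C &\approx 6ND = 6 \times (2\times10^{12}) \times (4\times10^{13}) = 4.8\times10^{26}\ \text{FLOPs} \\
\text{GPU-hours} &\approx \frac{C}{\eta P \times 3600} = \frac{4.8\times10^{26}}{0.4 \times 10^{15} \times 3600} \approx 3.3\times10^{8} \\
\text{Cost} &\approx 3.3\times10^{8}\ \text{GPU-h} \times \$2/\text{GPU-h} \approx \$6.7\times10^{8}
\end{aligned}
$$

Under these assumptions, compute alone comes to roughly $670M before staff, data, and failed runs. The $500M+ figure is therefore plausible in order of magnitude.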

---

Cracks in the Centralized Model

Yet this dominance has vulnerabilities.

There’s increasing concern over concentration risk. In Europe, executives from 44 major companies have warned regulators that the EU AI Act could unintentionally reinforce US cloud monopolies and constrain regional AI development. Export controls, particularly US-led GPU restrictions, limit who can access high-end compute, encouraging countries and developers to look toward decentralized or open alternatives.

Additionally, API pricing for proprietary models has seen multiple increases since 2024. These monopoly rents are motivating developers to consider lower-cost, open-weight, or decentralized solutions.

---

Decentralized AI

On-chain compute markets such as Akash, Render, and @ionet let GPU owners rent out unused capacity to AI workloads. These platforms are now expanding to support AMD GPUs and are working on workload-level proofs to verify delivered performance.

Bittensor incentivizes validators and model runners through its $TAO token. Federated learning is gaining adoption, mostly in healthcare and finance, by enabling collaborative training without moving sensitive raw data.
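
Here is a heavily simplified picture of how stake-weighted scoring can turn validator opinions into miner rewards. Each validator scores miners, the scores are averaged with validator stake as the weight, and a fixed emission is split pro rata. This is an illustration only. Bittensor's actual Yuma Consensus also clips weights that exceed the stake-weighted consensus, tracks validator bonds, and pays validators a share. None of that is modelled here, and all stakes, scores, and the emission amount are made up.

```python
# Simplified illustration of stake-weighted scoring; not Bittensor's Yuma Consensus.

def miner_emissions(
    stakes: dict[str, float],
    scores: dict[str, dict[str, float]],
    emission: float,
) -> dict[str, float]:
    """Split `emission` across miners by stake-weighted validator scores.

    stakes: validator -> stake (made-up numbers)
    scores: validator -> {miner -> score in [0, 1]}
    """
    total_stake = sum(stakes.values())
    consensus: dict[str, float] = {}
    for validator, row in scores.items():
        weight = stakes[validator] / total_stake
        for miner, score in row.items():
            consensus[miner] = consensus.get(miner, 0.0) + weight * score
    total = sum(consensus.values())
    if total == 0:
        return {miner: 0.0 for miner in consensus}
    return {miner: emission * c / total for miner, c in consensus.items()}


stakes = {"v1": 300.0, "v2": 100.0}
scores = {
    "v1": {"m1": 0.9, "m2": 0.1},
    "v2": {"m1": 0.1, "m2": 0.9},
}
print(miner_emissions(stakes, scores, emission=1.0))
```

Because the heavily staked validator v1 favours m1, m1 receives most of the emission even though v2 disagrees. Mechanisms like weight clipping aim to limit that kind of concentration.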

Proof-of-inference and zero-knowledge machine learning enable verifiable model outputs even when running on untrusted hardware. These are foundational steps for decentralized, trustless AI APIs.

---

Where the Economic Opportunity Lies

In the short term (now to 18 months), the focus is on application-layer infrastructure. Tools that let enterprises easily switch between OpenAI, Anthropic, Mistral, or local open-weight models will be valuable. Similarly, fine-tuning studios offering regulation-compliant versions of open models under enterprise SLAs are gaining traction.

In the medium term (18 months to 5 years), decentralized GPU networks could gain ground as their token prices come to reflect actual usage. Meanwhile, Bittensor-style subnetworks focused on specialized tasks, like risk scoring or protein folding, will scale efficiently through network effects.

In the long term (5+ years), edge AI is likely to dominate. Phones, cars, and IoT devices will run local LLMs trained through federated learning, cutting latency and cloud dependence. Data-ownership protocols will also emerge, allowing users to earn micro-royalties as their devices contribute gradients to global model updates.

---

How to Identify the Winners

Projects likely to succeed will have a strong technical moat, solving problems around bandwidth, verification, or privacy in a way that delivers order-of-magnitude improvements. Economic flywheels must be well designed: higher usage should fund better infrastructure and contributors, not just subsidize free riders.

Governance is essential. Token voting alone is fragile; look instead for multi-stakeholder councils, progressive decentralization paths, or dual-class token models.

Finally, ecosystem pull matters. Protocols that integrate early with developer toolchains will compound adoption faster.

---

Strategic Plays

For investors, it may be wise to hedge, holding exposure to both centralized APIs (for stable returns) and decentralized tokens (for asymmetric upside). For builders, abstraction layers that allow real-time switching between centralized and decentralized endpoints based on latency, cost, or compliance are a high-leverage opportunity.
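
Here is a minimal sketch of such an abstraction layer. It filters endpoints on hard constraints (data-residency region and a latency ceiling), then ranks the survivors on a weighted, normalized mix of cost and latency. Every endpoint name, price, latency figure, and region tag below is a hypothetical placeholder, as is the `cost_weight` parameter. None are quotes from any real provider or network.

```python
# Hypothetical routing layer; endpoint names, prices, and latencies are placeholders.
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    decentralized: bool
    usd_per_1k_tokens: float
    p50_latency_ms: float
    regions: frozenset[str]  # jurisdictions where this endpoint may process data


def route(endpoints: list[Endpoint], region: str, max_latency_ms: float,
          cost_weight: float = 0.5) -> Endpoint:
    """Apply hard compliance/latency filters, then minimise a cost-latency score."""
    eligible = [e for e in endpoints
                if region in e.regions and e.p50_latency_ms <= max_latency_ms]
    if not eligible:
        raise LookupError("no endpoint meets the compliance and latency constraints")
    max_cost = max(e.usd_per_1k_tokens for e in eligible) or 1.0
    max_latency = max(e.p50_latency_ms for e in eligible) or 1.0

    def score(e: Endpoint) -> float:
        # Normalise both metrics to [0, 1] among eligible endpoints; lower is better.
        return (cost_weight * e.usd_per_1k_tokens / max_cost
                + (1 - cost_weight) * e.p50_latency_ms / max_latency)

    return min(eligible, key=score)


endpoints = [
    Endpoint("central-api", False, 0.010, 300, frozenset({"EU", "US"})),
    Endpoint("gpu-market", True, 0.004, 450, frozenset({"US"})),
    Endpoint("local-open-weights", False, 0.002, 900, frozenset({"EU", "US"})),
]
print(route(endpoints, region="US", max_latency_ms=600).name)
print(route(endpoints, region="EU", max_latency_ms=600).name)
```

The key design choice is treating compliance as a hard filter rather than a scoring term. No price advantage should route regulated data to an endpoint in the wrong jurisdiction.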

The most valuable opportunities may lie not at the poles but in the connective tissue: protocols, orchestration layers, and cryptographic proofs that allow workloads to route freely between centralized and decentralized systems.

Thanks for reading!

The content on this page is provided by third parties. Unless otherwise stated, OKX is not the author of the cited article(s) and does not claim any copyright in the materials. The content is provided for informational purposes only and does not represent the views of OKX. It is not intended to be an endorsement of any kind and should not be considered investment advice or a solicitation to buy or sell digital assets. To the extent generative AI is utilized to provide summaries or other information, such AI generated content may be inaccurate or inconsistent. Please read the linked article for more details and information. OKX is not responsible for content hosted on third party sites. Digital asset holdings, including stablecoins and NFTs, involve a high degree of risk and can fluctuate greatly. You should carefully consider whether trading or holding digital assets is suitable for you in light of your financial condition.