Not going to reintroduce @OpenledgerHQ. If you've been following along, you already get it. If you haven't, keep reading the post below 👇

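But here's what most people still don't realize:

This isn't just another gimmicky "Web3 AI farm."

@OpenledgerHQ is quietly solving the core problem in AI today: aligning models with real-world incentives.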

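Think about it...

Today's AI is built on data from people who never opted in and were never paid.

Then it produces outputs you can't audit or trace, and definitely can't monetize unless you're Big Tech.

That's why there's a new lawsuit every week. Not because AI is bad, but because the system is broken at the source.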

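OpenLedger doesn't just patch this problem.

It builds a protocol where:

▸ Attribution is the default

▸ Rewards are automatic

▸ The more useful your data, the more you earn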

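What GitHub did for open-source developers, OpenLedger does for open-data contributors.

You can upload, fine-tune, prompt, or even just curate.

The system handles attribution, tracks how models are used, and pays you.

All on-chain. No middlemen.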
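Here is a minimal Python sketch of "the system handles attribution, tracks usage, and pays you." It rests on several assumptions. Each inference call is charged a fee in whole token units. Some attribution step has already produced a usefulness score for each contributor. `split_fee`, `UsageLedger`, and `record_inference` are hypothetical names, not OpenLedger's API. An in-memory dict stands in for on-chain state.

```python
from collections import defaultdict


def split_fee(fee: int, scores: dict[str, float]) -> dict[str, int]:
    """Split an integer fee among contributors in proportion to their scores.

    Largest-remainder rounding guarantees the payouts sum to exactly `fee`.
    """
    # Only positive scores earn a share in this sketch.
    positive = {c: s for c, s in scores.items() if s > 0}
    total = sum(positive.values())
    if total == 0:
        return {}
    raw = {c: fee * s / total for c, s in positive.items()}
    payouts = {c: int(v) for c, v in raw.items()}
    leftover = fee - sum(payouts.values())
    # Give the remaining units to the largest fractional parts first.
    order = sorted(raw, key=lambda c: raw[c] - payouts[c], reverse=True)
    for c in order[:leftover]:
        payouts[c] += 1
    return payouts


class UsageLedger:
    """In-memory stand-in for on-chain state: logs calls and credits balances."""

    def __init__(self) -> None:
        self.balances: defaultdict[str, int] = defaultdict(int)
        self.calls: list[tuple[str, int, dict[str, float]]] = []

    def record_inference(self, model_id: str, fee: int,
                         scores: dict[str, float]) -> dict[str, int]:
        """Log one model call and credit each contributor's share of its fee."""
        self.calls.append((model_id, fee, dict(scores)))
        payouts = split_fee(fee, scores)
        for contributor, amount in payouts.items():
            self.balances[contributor] += amount
        return payouts
```

Largest-remainder rounding makes the payouts add up to exactly the fee. With plain truncation, fractional units would silently go unpaid. For example, a fee of 100 split by scores 3:1 pays 75 and 25, so the more useful contributor earns more.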

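Here's the part most people are sleeping on: you don't need to be an AI researcher to participate.

You can build datasets, build logic, refine outputs, and still earn.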

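If you're only here for Kaito or Cookie rewards, that's fine.

But the real alpha?

OpenLedger is building the rails for a new AI economy, one where the protocol knows who contributed what and routes value accordingly.

If the next billion-dollar model is trained on-chain, it will be because this system made it possible.

Contribution → Attribution → Reward.

This isn't farming.

It's the foundation.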

We’ve made language models sound like magic, but no one wants to talk about the messy, secretive pipelines underneath.

Billions of data points scraped. Zero accountability.

Outputs generated. No receipts.

And when the AI says something wrong, offensive, or outright plagiarized?

We shrug. “The model learned that somewhere.”

The problem has a name: The Black Box.

And it’s starting to cost us.

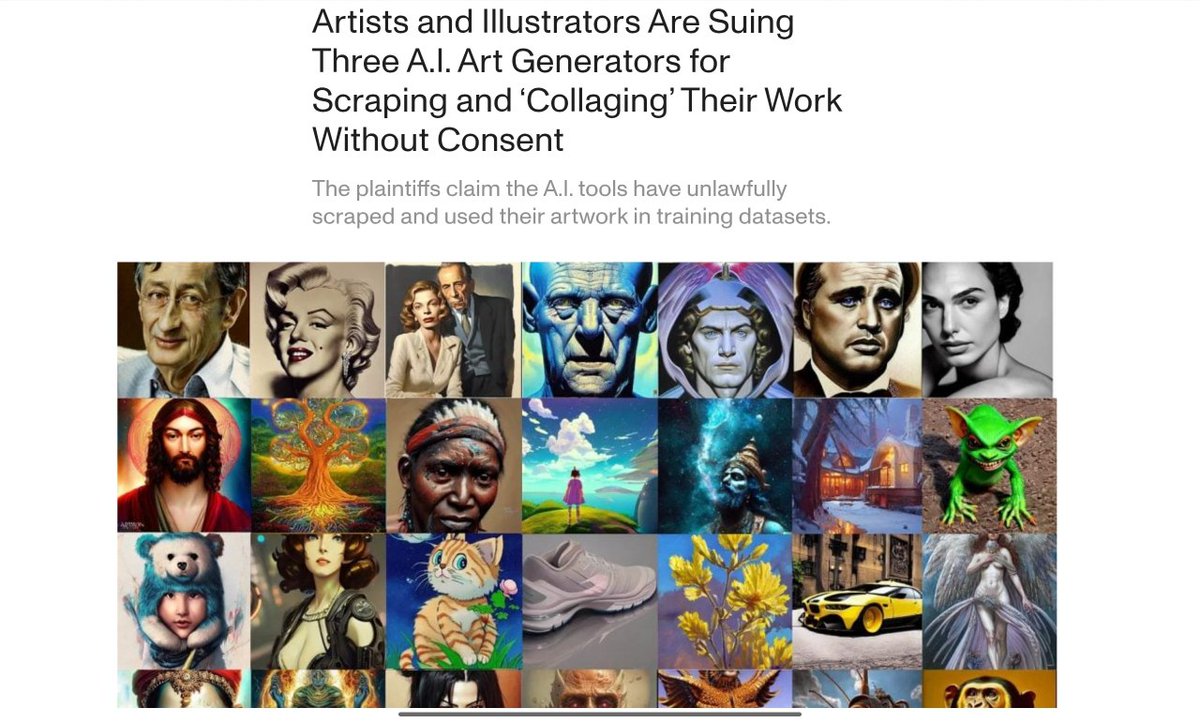

Remember when artists across DeviantArt, ArtStation, and Behance discovered their portfolios were used, without consent, to train AI image models?

Entire art styles copied. Signatures warped. Commercial outputs that looked eerily identical to original works.

Thousands of creators filed petitions, joined class-action lawsuits, and demanded regulation.

Their question was simple: “Where did the model get this from?”

But the answer was murky.

Because the truth is: most AI companies can’t explain what data shaped what output. The training pipelines are opaque by design. No logs. No attribution. No ownership.

That's what breaks trust and stifles accountability.

But what if we built AI differently?

What if we could track every contribution, reward high-quality data, and hold models accountable, from the bottom up?

That’s the mission of @OpenledgerHQ, a decentralized AI network where attribution is built into the protocol itself.

At the core is something called Proof of Attribution (PoA).

Think of it as the opposite of a black box.

PoA ensures every AI output can be traced back to the data that shaped it.

It cryptographically links training data, model behavior, and contributor identity, all stored transparently on-chain.

This means:

» You can verify who added what data

» Contributors get rewarded based on impact

» Malicious or low-quality inputs can be penalized

» AI responses gain explainability, a critical step in safety and trust
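A hash chain is one way to picture the "cryptographic link" and the ability to "verify who added what data." Each record commits to a contributor, the SHA-256 hash of their data, a model version, and the hash of the previous record. Changing any past record breaks the chain, as long as the latest hash is published somewhere trusted.

This is a generic sketch, not OpenLedger's record format. `AttributionRecord`, `append_record`, `head_hash`, `verify_chain`, and `was_contributed` are hypothetical. The contributor field is a plain string, where a real system would use a signed wallet identity. The sketch also only commits to data. It does not by itself show how that data influenced a model.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class AttributionRecord:
    contributor: str  # e.g. a wallet address (hypothetical format)
    data_hash: str    # SHA-256 of the contributed data; the data itself is stored elsewhere
    model_id: str     # model version trained on this data
    prev_hash: str    # hash of the previous record, forming the chain

    def record_hash(self) -> str:
        # Canonical JSON (sorted keys) so identical fields always hash identically.
        return sha256_hex(json.dumps(asdict(self), sort_keys=True).encode())


def head_hash(log: list[AttributionRecord]) -> str:
    """Hash of the latest record; assumed to be published somewhere trusted."""
    return log[-1].record_hash() if log else GENESIS


def append_record(log: list[AttributionRecord], contributor: str,
                  data: bytes, model_id: str) -> AttributionRecord:
    record = AttributionRecord(contributor, sha256_hex(data), model_id, head_hash(log))
    log.append(record)
    return record


def verify_chain(log: list[AttributionRecord], expected_head: str) -> bool:
    """Return False if any record was altered, dropped, or reordered."""
    prev = GENESIS
    for record in log:
        if record.prev_hash != prev:
            return False
        prev = record.record_hash()
    return prev == expected_head


def was_contributed(log: list[AttributionRecord], contributor: str, data: bytes) -> bool:
    """Check whether `contributor` registered exactly this data."""
    target = sha256_hex(data)
    return any(r.contributor == contributor and r.data_hash == target for r in log)
```

Checking the final hash against `expected_head` matters. Without that check, editing or deleting the newest record would go unnoticed, because no later record points back to it.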

The @OpenledgerHQ testnet is already live, and unlike most, it’s not just a “demo.”

You can:

▸ Create your own Datanet

▸ Fine-tune or interact with AI models

▸ Track which data shaped the responses

▸ Claim rewards through their Yapper leaderboard

▸ Do all of this without a GPU or any ML experience
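Tracking "which data shaped the responses" and rewarding contributors "based on impact" both need some measure of influence. The sketch below uses leave-one-out data valuation, a standard technique swapped in here for illustration. It is not necessarily what Proof of Attribution does.

The idea is to remove one contributor's data, rerun the model, and see how much the error on a query changes. A positive score means the data helped, making it a reward candidate. A negative score means it hurt, making it a penalty candidate.

The model is a tiny Gaussian kernel regressor, chosen because removing data needs no retraining. For large language models, retraining once per contributor is impractical, so real systems rely on approximations. All names and the sample data are hypothetical.

```python
import math

# contributor -> list of (input, target) training points
Dataset = dict[str, list[tuple[float, float]]]


def predict(points: list[tuple[float, float]], x: float, bandwidth: float = 1.0) -> float:
    """Gaussian kernel regression: a weighted average of training targets,
    where training inputs closer to `x` get more weight."""
    weights = [math.exp(-((x - xi) ** 2) / (2 * bandwidth ** 2)) for xi, _ in points]
    total = sum(weights)
    if total == 0:
        return 0.0  # no usable training data: fall back to a neutral prediction
    return sum(w * yi for w, (_, yi) in zip(weights, points)) / total


def squared_error(data: Dataset, x: float, y_true: float) -> float:
    points = [p for pts in data.values() for p in pts]
    return (predict(points, x) - y_true) ** 2


def leave_one_out_scores(data: Dataset, x: float, y_true: float) -> dict[str, float]:
    """Score each contributor by how much the error rises when their data is removed.

    > 0: the data helped on this query; < 0: the data hurt.
    """
    baseline = squared_error(data, x, y_true)
    scores = {}
    for contributor in data:
        without = {c: pts for c, pts in data.items() if c != contributor}
        scores[contributor] = squared_error(without, x, y_true) - baseline
    return scores
```

As an example, suppose `alice` contributes `[(0.0, 1.0), (1.0, 2.0)]`, `bob` contributes `[(0.5, 1.5)]`, and `mallory` contributes the outlier `[(0.6, 50.0)]`. For a query at `x = 0.5` with target `1.5`, mallory's point drags the prediction far off. Removing it lowers the error, so her score is negative, while alice and bob score positive.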

All interactions are on-chain. No mystery. No magic. Just real, accountable AI.

In a time when big players are locked in billion-dollar lawsuits and public distrust is growing, OpenLedger is building something radically different:

> *A future where data is community-owned.*

> *A future where AI models are open, transparent, and auditable.*

> *A future where contributors are not exploited, but empowered.*

So, while others are hiding behind NDAs and closed weights, OpenLedger is busy making proof the new default.

Just open AI, by design.

Want to see it in action?

Epoch 2 of the testnet is live.

No gatekeeping. No GPU. Just your curiosity and a wallet.

OPENLEDGER 🟠

Transparent AI. Owned by everyone.


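The content on this page is provided by a third party. Unless otherwise stated, OKX is not the author of the cited article and does not claim any copyright in the material. The content is for reference only and does not represent the views of OKX. It does not constitute an endorsement of any kind and should not be considered investment advice or a solicitation to buy or sell digital assets. Where generative AI is used to provide summaries or other information, such AI-generated content may be inaccurate or inconsistent. Please read the linked article for more details and information. OKX is not responsible for content on third-party websites. Digital assets, including stablecoins and NFTs, involve a high degree of risk, and their value may fluctuate significantly. Please consider carefully whether trading or holding digital assets is suitable for you in light of your financial situation.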