One day a friend told me he was quitting his job, and his reason sounded perfectly justified: "The boss is out of his mind. One person doing the work of three, meetings every day, and he loves to talk about 'empowerment,' 'closing the loop,' and 'building agile teams.'"

I asked him what he would do after quitting. He said he planned to build an automated website where AI writes the content, run affiliate marketing on it, and set up a paid-subscription Telegram group.

The era of AI as a mere tool is coming to an end. AI is turning into agents that run tasks, generate business, and sign contracts on their own. If yesterday's AI was an employee, today's AI wants to be the boss. And @Mira_Network is like the old man who sits in the shade at the mouth of an old Beijing hutong: he never shows off, but whenever kids get into a fight or a family has a quarrel, the neighbors can't get around him.

You never see him giving orders, but you can feel his presence. Mira occupies a similar position with its verification network: whether you are an AI employee on-chain or a smart agent running automated trades, once you are out there talking, working, and signing, you can't avoid Mira's "stamp of approval."

The agent framework partnerships Mira recently announced look like a mixed bag: some are about gaming, some about trading, and some about smart DAOs. But they share one simple need: a trustworthy, verifiable foundation. The fear isn't that AI is dumb; it's that AI talks nonsense.

What Mira provides is not a smarter brain but a sense of order. Before an output goes out, it is broken down into individual claims and sent into the verification network, where other models check whether each claim is true. Only after passing can the output move on; if it fails, it's like the old man at the alley entrance waving his hand: "Hey, don't talk nonsense, that's not right."

The process sounds simple, but in practice it is a tough engineering problem, because language generation is continuous while verification is discrete. How do you break a sentence into verifiable chunks? How do you make sure the meaning survives the split? This is not just a game between models; it tests both language understanding and engineering finesse.
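To make the claim-checking flow concrete, here is a toy sketch in Python. It is not Mira's actual protocol or API: the sentence-level splitting, the stand-in "verifier" functions, and the two-thirds supermajority threshold are all hypothetical assumptions chosen only to illustrate the idea of decomposing an output into claims and letting several independent checkers vote on each one.

```python
from fractions import Fraction
from typing import Callable, Dict, List, Tuple

# Hypothetical verifier: given one claim, returns True if it judges the claim correct.
# In a real network each verifier would be a separate model; here they are plain functions.
Verifier = Callable[[str], bool]


def split_into_claims(text: str) -> List[str]:
    """Naive decomposition (an assumption): treat each sentence as one claim."""
    normalized = text.replace("!", ".").replace("?", ".")
    return [part.strip() for part in normalized.split(".") if part.strip()]


def verify_claim(claim: str, verifiers: List[Verifier],
                 threshold: Fraction = Fraction(2, 3)) -> bool:
    """Accept a claim only if at least `threshold` of the verifiers approve it."""
    if not verifiers:
        raise ValueError("need at least one verifier")
    votes = sum(1 for verify in verifiers if verify(claim))
    return Fraction(votes, len(verifiers)) >= threshold


def verify_output(text: str, verifiers: List[Verifier],
                  threshold: Fraction = Fraction(2, 3)) -> Tuple[bool, Dict[str, bool]]:
    """Check every claim; the whole output passes only if every claim passes."""
    results = {claim: verify_claim(claim, verifiers, threshold)
               for claim in split_into_claims(text)}
    return all(results.values()), results


# Toy demo (hypothetical): two verifiers that only accept claims from a shared
# fact set, plus one careless verifier that approves everything.
KNOWN_FACTS = {"Paris is the capital of France"}
verifiers: List[Verifier] = [
    lambda claim: claim in KNOWN_FACTS,
    lambda claim: claim in KNOWN_FACTS,
    lambda claim: True,
]

ok, results = verify_output(
    "Paris is the capital of France. The Moon is made of cheese.", verifiers
)
print(ok)  # False: the second claim gets only 1 of 3 votes
print(results)
```

Splitting on sentence boundaries is the crudest possible decomposition. The hard part the paragraph describes, producing claims that still mean what the original sentence meant, is exactly what a naive split like this does not guarantee; the voting step also shows why a single careless verifier cannot push a false claim through on its own.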

Mira's role is also quite interesting. It doesn't loudly proclaim that it will make AI smarter or more general; instead, it quietly vouches for each agent's output before it goes out, making sure the agent isn't talking nonsense. The more AI works on its own, runs processes, and joins organizations, the more it needs a mechanism behind the scenes that answers for it.

In the future, when groups of AIs really do form DAOs, earn their own income, and collaborate as "employees" and "superiors," we might not mention Mira every day, just as nobody thinks about DNS all the time. But deep down you'll know that without this seemingly inconspicuous verification network, the AI revolution would never get off the ground.

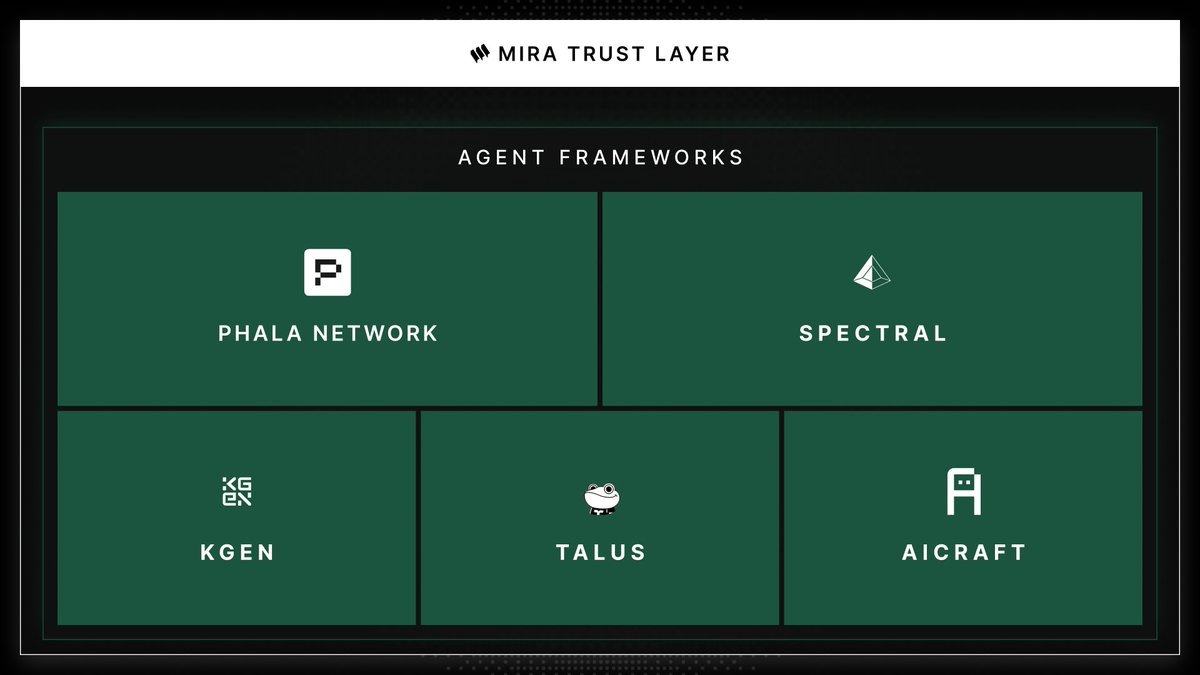

Ecosystem Spotlight: Agent Frameworks Layer (Part 2)

Reliable AI agents require verified intelligence. Our verification network powers the next generation of autonomous agents by ensuring their outputs are accurate, trustless, and error-resistant.

We provide the verification infrastructure that agent frameworks tap into to eliminate hallucinations and ensure autonomous operations can scale safely, without requiring human oversight.

We power agent frameworks including 👇

1. KGeN

@KGeN_IO brings proprietary gaming datasets into our verification network, enabling scalable, trustless evaluation of game-specific AI agents and mechanics. Together, we’re building the foundation for provable intelligence in gaming worlds.

2. Talus Network

@TalusNetwork is building the backbone of the Autonomous AI Economy, using our verification to support on-chain, income-generating AI agents with guaranteed reliability. Our work sets the stage for a decentralized labor force powered by agents you can trust.

3. AiCraft

@aicraftfun, a top 10 project on Monad Radar and featured by Bitcoin(.)com, is a launchpad for next-gen AI agents, now backed by our verification stack. We’re enabling the next wave of AI-native apps that prove what they do.

4. Spectral Labs

@Spectral_Labs enables users to build agentic companies and networks of autonomous agents that collaborate toward shared goals, all powered by our consensus mechanisms. This is the first step toward scalable, verifiable coordination between AI agents.

5. Phala Network

@PhalaNetwork integrates our system as an official model provider, delivering trustless inference and verifiable LLMs for its secure AI agent ecosystem. Together, we’re making private, provable AI the default across Web3.

By integrating with diverse frameworks ranging from gaming to social, from blockchain-native to multi-modal, we ensure verification travels with the agent, wherever it operates.

Stay tuned for more ecosystem deep dives!

The content on this page is provided by third parties. Unless otherwise stated, OKX is not the author of the cited article(s) and does not claim any copyright in the materials. The content is provided for informational purposes only and does not represent the views of OKX. It is not intended to be an endorsement of any kind and should not be considered investment advice or a solicitation to buy or sell digital assets. To the extent generative AI is utilized to provide summaries or other information, such AI generated content may be inaccurate or inconsistent. Please read the linked article for more details and information. OKX is not responsible for content hosted on third party sites. Digital asset holdings, including stablecoins and NFTs, involve a high degree of risk and can fluctuate greatly. You should carefully consider whether trading or holding digital assets is suitable for you in light of your financial condition.