AI'S BIGGEST BOTTLENECK IS DATA MOVEMENT

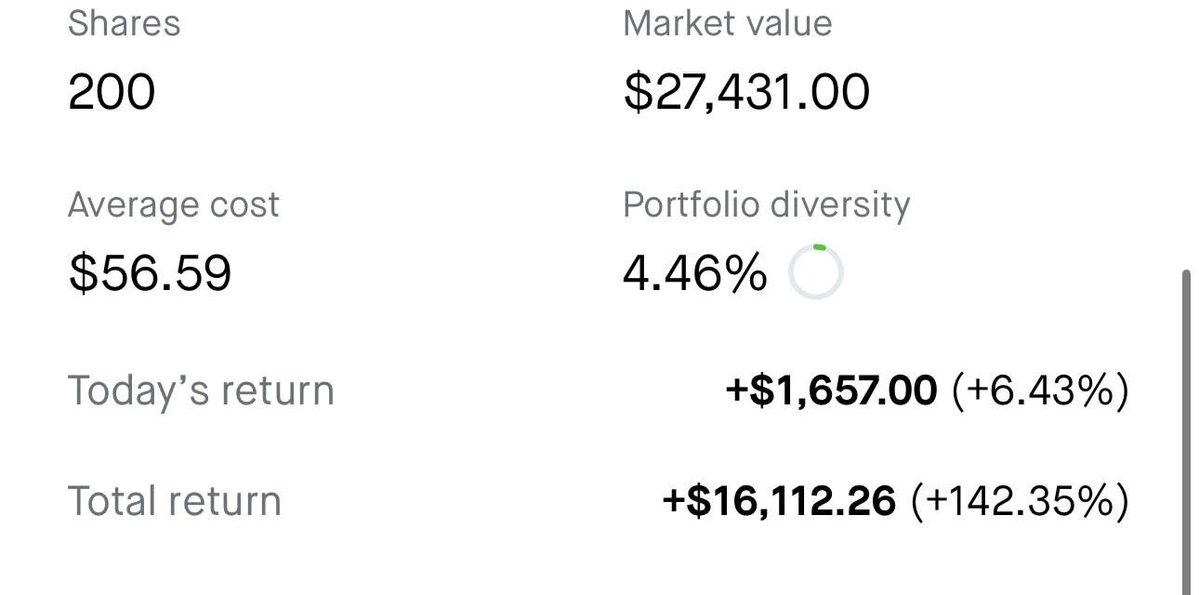

I've been saying it all year: $ALAB is going to be one of the biggest winners from AI inference, thanks to the data-movement tax on AI workloads.

The reality is simple: data isn't moving fast enough between compute, memory & storage to keep up with real-time inference. And that gap only widens as models become more interactive, decentralised & dynamic.

Every major AI hardware player is adapting:

• $NVDA Grace Hopper -- built around memory coherence

• $AMD MI300 -- optimised for high-bandwidth interconnects

• $MSFT AI data centres -- designed with custom networking topologies

They're all converging on the same challenge: compute is now a connectivity problem. Astera Labs is my favourite way to play that bottleneck.
