#OpenLedger is the "AI version of Ethereum + GitHub": it makes AI open-source, credible, and traceable, so that everyone can participate and benefit.

After recently reading @OpenledgerHQ's "Proof of Attribution" white paper, I am increasingly convinced that in the second half of #AI development, establishing who contributed what across the whole #AI pipeline will be the biggest pain point of traditional AI. #OpenLedger addresses this pain point by combining two currently popular fields, #AI + #Blockchain. According to a @MessariCrypto research report, the #AI track will reach a market value of more than $2 trillion by 2030, so the potential is self-evident. Today we will analyse #OpenLedger, a new #AI dark horse, and its 3 early free participation opportunities.

At present, #AI is basically controlled by large companies (OpenAI, Google, Meta). How the models are trained, whose data is used, and how the revenue is distributed are all a complete black box. Ordinary people can neither participate nor benefit.

#OpenLedger uses Proof of Attribution to enable #AI-generated content (e.g., images, articles, music) to be traced back to the source, and to ensure that all data providers who contribute to building professional AI models will be recognised or incentivised.

• How the model is trained → publicly available

• Whose data is used → recorded, with credentials

• Who contributes data → can be tracked and rewarded

This is an "anti-monopoly" structure at the foundational level, and it goes straight at the core pain point of today's #AI: unfairness.

#OpenLedger (@OpenledgerHQ) is a decentralised, blockchain-based AI platform that aims to enable transparency, community governance, and open access to AI. Unlike traditional AI models controlled by big tech companies, #OpenLedger allows the community to train, validate, and create specialised AI models. The result is a fairer system where individuals who provide data and models get the credit and rewards they deserve.

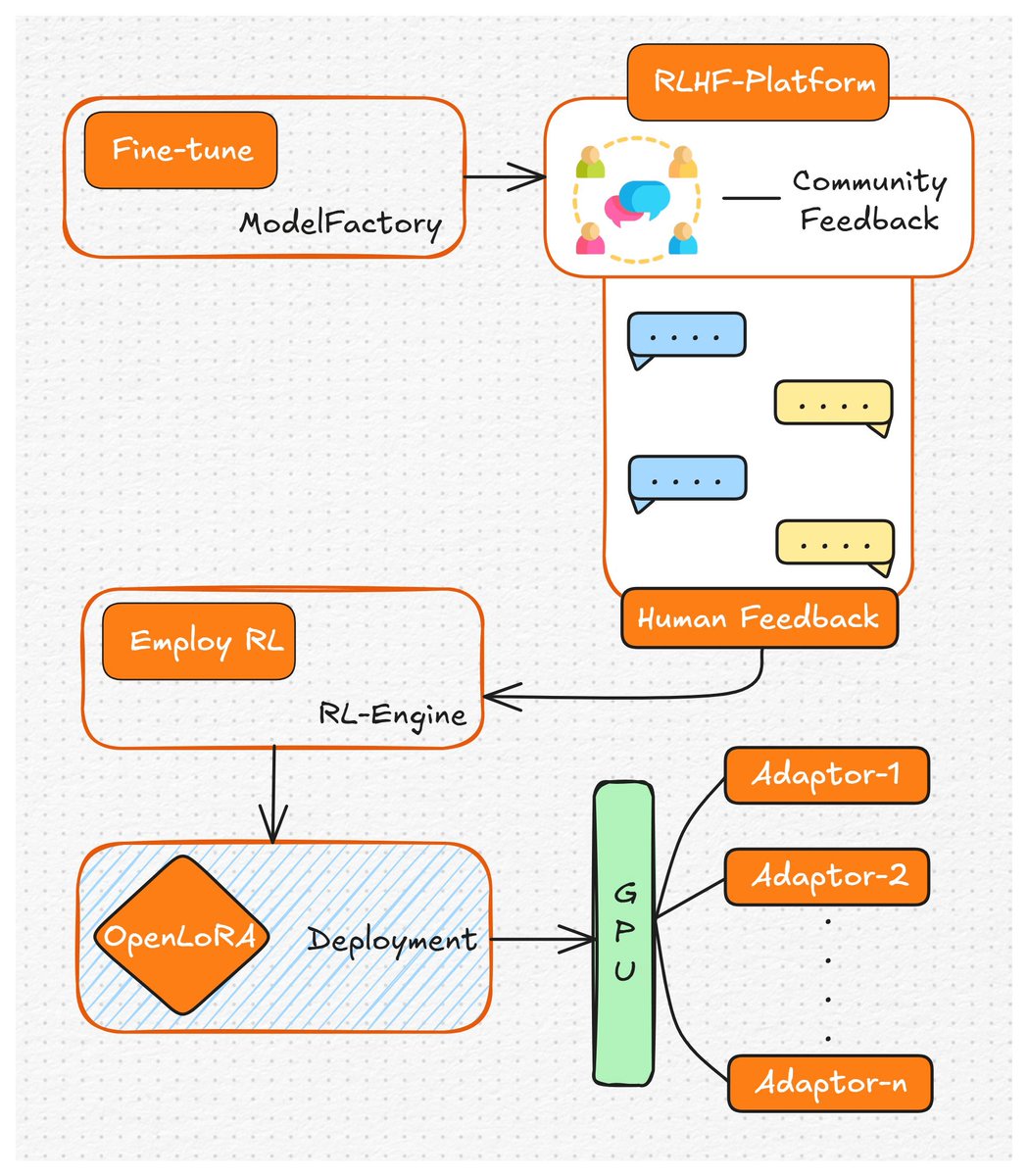

#OpenLedger's architecture uses 5 core layers:

🔵 Consensus Layer (shared security based on EigenLayer)

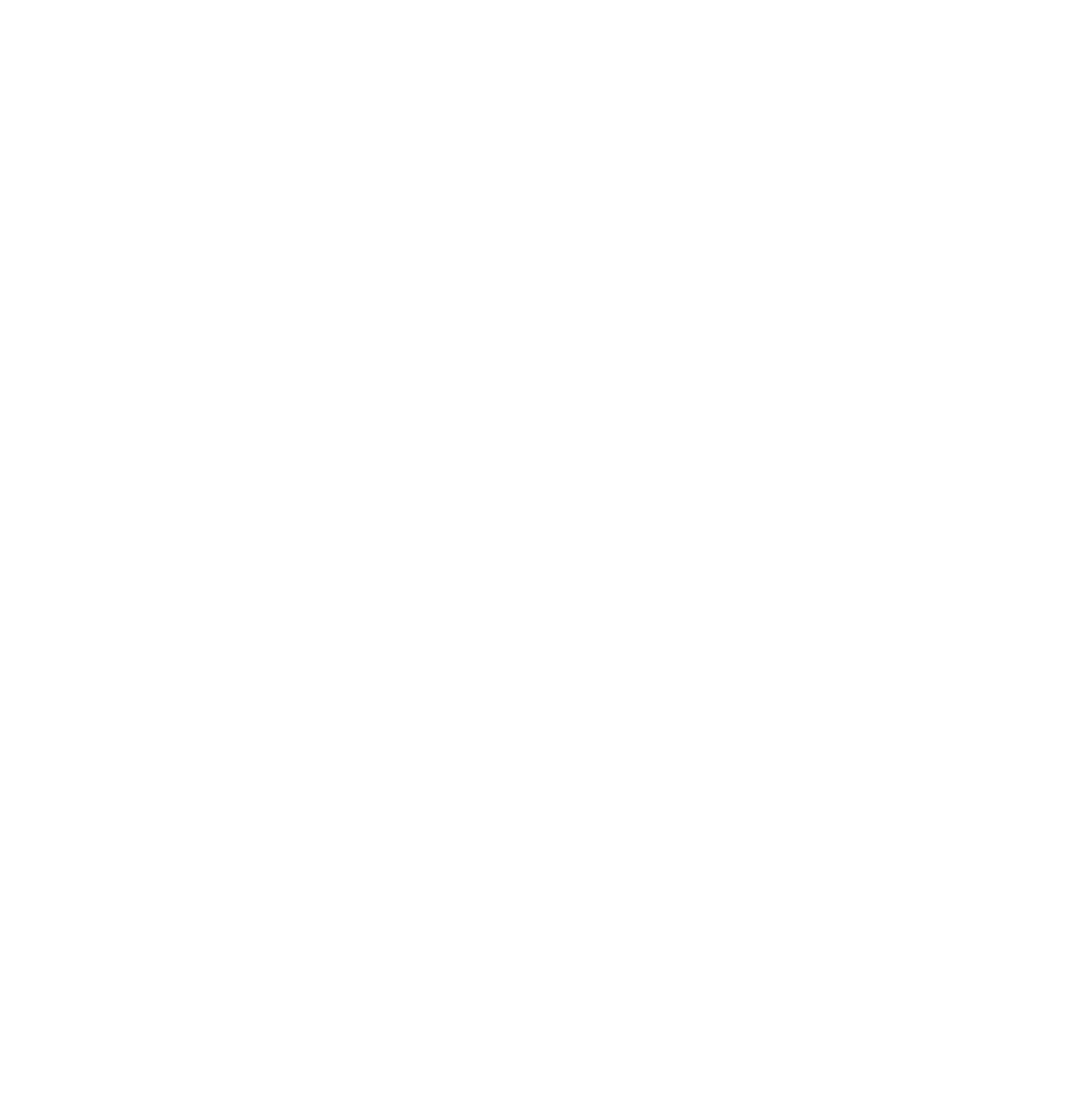

🔵 Model Runtime Layer (OpenLoRA)

🔵 Datanet + Proof of Attribution

🔵 Task Execution Layer (Task Verification and Incentives)

🔵 User Participation Layer (Plug-in, Low Threshold Entry)

There is too much technical detail to repeat here; interested readers can find the white paper at the top of the @OpenledgerHQ homepage. Ordinary users will probably care most about the user participation layer, where the main ways to take part are (more detailed strategies later):

• Chrome plug-in: acts as a data collector (contributing prompts and web page data)

• Local runner: run an OpenLoRA node to earn points; supports CPU and GPU

• Contribution interactions: upload datasets, train models, and validate others' results, forming an #AI crowdsourcing community

#OpenLedger Key Benefits:

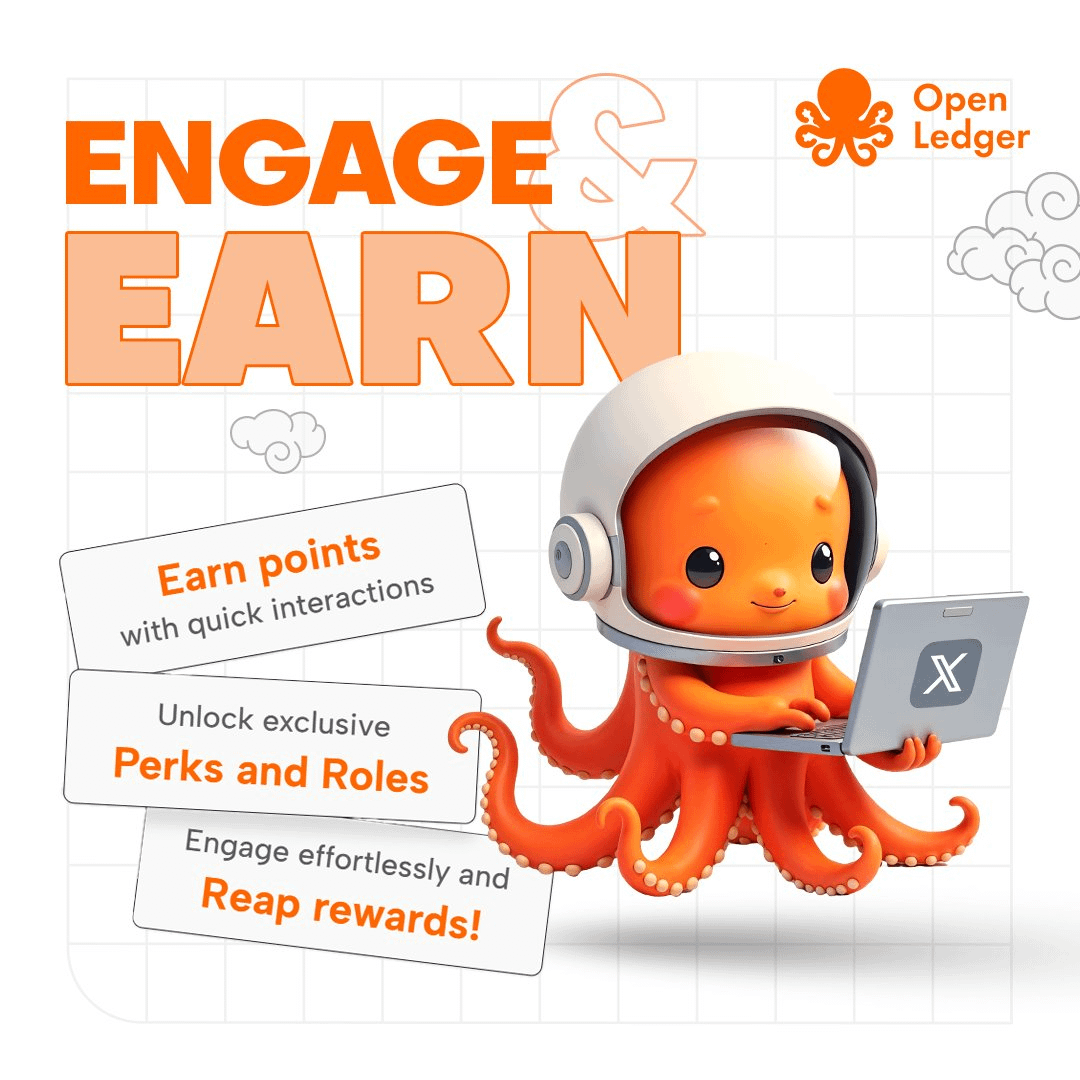

1️⃣ OpenLoRA: 1 graphics card runs thousands of models

#OpenLedger's most hardcore product is OpenLoRA, the infrastructure for the model deployment layer. It may be difficult to grasp in the abstract, so here is an example.

👉 Suppose you have one LLM and 1,000 "skill plugins" fine-tuned with LoRA (e.g. lawyer, doctor, fitness trainer, teacher). If you deploy each fine-tuned model separately in the traditional way, you need 1,000 graphics cards, which is ridiculously expensive.
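To see why 1,000 separate deployments are so wasteful, here is a rough back-of-the-envelope sketch. It relies only on the standard LoRA construction, in which a weight matrix of shape `d_out × d_in` gets two low-rank factors of rank `r`, adding `r × (d_in + d_out)` parameters. The layer width (4096) and rank (8) are my own illustrative assumptions, not figures from OpenLedger.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Extra parameters LoRA adds to one d_out x d_in weight matrix.

    LoRA learns two small factors, A (rank x d_in) and B (d_out x rank),
    instead of a full d_out x d_in update.
    """
    return rank * (d_in + d_out)


def full_params(d_in: int, d_out: int) -> int:
    """Parameters in the full weight matrix that a separate model copy duplicates."""
    return d_in * d_out


# Hypothetical numbers chosen for illustration only.
D = 4096   # assumed layer width
RANK = 8   # assumed LoRA rank

adapter = lora_params(D, D, RANK)
full = full_params(D, D)
ratio = adapter / full

print(adapter)          # 65536
print(full)             # 16777216
print(f"{ratio:.2%}")   # 0.39%
```

Under these assumptions each "skill plugin" is well under 1% of the size of the layer it modifies, so copying the whole base model once per plugin duplicates almost entirely identical weights.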

#OpenLoRA's approach:

• Load only one base model (e.g. Mistral)

• Dynamically load LoRA plugins on demand, at the moment they are used

• GPU memory does not blow up, switching takes milliseconds, and inference is even faster

• Save 90%+ on server costs
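The "one base model, adapters loaded on demand" idea can be sketched as a small cache. This is not OpenLoRA's code or API: `AdapterCache`, `fake_loader`, and `serve` are hypothetical names, the adapters are stand-in dictionaries rather than real weights, and the least-recently-used eviction policy is my own assumption about how memory might be kept bounded.

```python
from collections import OrderedDict
from typing import Callable, Dict, List

Adapter = Dict[str, float]  # stand-in for a set of LoRA weight deltas


class AdapterCache:
    """Keeps at most `capacity` adapters resident; evicts the least recently used."""

    def __init__(self, loader: Callable[[str], Adapter], capacity: int) -> None:
        self._loader = loader
        self._capacity = capacity
        self._cache: "OrderedDict[str, Adapter]" = OrderedDict()
        self.loads = 0  # how many times an adapter had to be loaded from storage

    def get(self, name: str) -> Adapter:
        if name in self._cache:
            # Cache hit: mark as most recently used, no load needed.
            self._cache.move_to_end(name)
            return self._cache[name]
        # Cache miss: load on demand, then evict the oldest entry if over capacity.
        adapter = self._loader(name)
        self.loads += 1
        self._cache[name] = adapter
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)
        return adapter

    def resident(self) -> List[str]:
        """Adapter names currently in memory, least recently used first."""
        return list(self._cache)


def fake_loader(name: str) -> Adapter:
    """Hypothetical loader; a real one would read adapter weights from disk."""
    return {"scale": float(len(name))}


def serve(cache: AdapterCache, base_model: str, adapter_name: str, prompt: str) -> str:
    """Pretend to answer a prompt with the shared base model plus one adapter."""
    cache.get(adapter_name)
    return f"[{base_model}+{adapter_name}] {prompt}"


cache = AdapterCache(fake_loader, capacity=2)
for skill in ["lawyer", "doctor", "lawyer", "teacher"]:
    serve(cache, "base-model", skill, "hello")

print(cache.loads)       # 3
print(cache.resident())  # ['lawyer', 'teacher']
```

Four requests trigger only three loads because the second "lawyer" request is served from memory, and the base model itself is never duplicated.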

📌 This technology is a major boon for small and medium-sized businesses and individuals who want private #AI deployments: one LoRA per person, a multi-user Copilot. It is also strongly complementary to large model platforms and ecosystems (such as HuggingFace and Bittensor projects).

So #OpenLoRA is not just a concept but a shipped product that tackles the cost and scalability problems of AI infra. It has strong commercialisation potential and is especially suited to local, private deployment by small and medium-sized enterprises or individuals.

2️⃣ Data and Contribution Confirmation System: Datanet + Proof of Attribution

We know that #AI training is inseparable from data. Data is the oil of the #AI era, computing power is the engine, and the model is the highway; the three are tightly interlinked. Yet data sourcing has always been a legal and ethical grey area (e.g. GitHub code and Reddit posts are used for training without giving contributors any benefit).

So #OpenLedger did two things:

• With Proof of Attribution, every contribution is recorded on-chain and traceable in real time, so anyone can check who contributed what and how much

• With Datanet, it builds a decentralised data market, so that ownership of data and models can be confirmed, and they can be traded and traced like NFTs
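To make "recorded, traceable, and rewarded" concrete, here is a toy sketch of an append-only contribution log with a proportional payout. It is not OpenLedger's on-chain format or its attribution algorithm: `AttributionLog`, the `weight` field (a hypothetical contribution score), and the proportional split rule are all my own assumptions for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AttributionLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, contributor: str, dataset_id: str, weight: float) -> str:
        """Append a contribution, chained to the hash of the previous entry."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"contributor": contributor, "dataset_id": dataset_id,
                "weight": weight, "prev": prev}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def split_reward(self, total: float) -> Dict[str, float]:
        """Split `total` in proportion to each contributor's summed weight."""
        totals: Dict[str, float] = {}
        for entry in self.entries:
            name = entry["contributor"]
            totals[name] = totals.get(name, 0.0) + entry["weight"]
        weight_sum = sum(totals.values())
        if weight_sum == 0:
            return {}
        return {name: total * w / weight_sum for name, w in totals.items()}


log = AttributionLog()
log.record("alice", "ds-1", 3.0)
log.record("bob", "ds-2", 1.0)

payouts = log.split_reward(100.0)
ok_before = log.verify()

# Quietly inflating a recorded weight is detected on verification.
log.entries[0]["weight"] = 30.0
tampered_ok = log.verify()

print(payouts)      # {'alice': 75.0, 'bob': 25.0}
print(ok_before)    # True
print(tampered_ok)  # False
```

The hash chain only makes tampering detectable; deciding how much each dataset actually influenced a model output is the hard part, and that is what the white paper's Proof of Attribution is meant to address.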

